Life and deeds of PAL video signal

A progressive PAL video signal is actually quite simple. A single PAL frame has 312 lines with the following structure. The first 5 lines indicate the start of a new frame and provide the vertical sync signals the monitor needs to sync to. The next 304 lines contain the visible image, although some lines, typically the first 20 at the top and the last 20 at the bottom, are clipped off by the monitor. The exact number of clipped lines depends on the monitor or TV. Finally, after the visible image come 3 lines that again contain vertical sync signals and tell the monitor to jump back to the top of the display.

Each PAL scanline is exactly 64us long. The sync lines are made of a series of long and short pulses. A long pulse is 30us low followed by 2us high. A short pulse is 2us low followed by 30us high. Either way a pulse lasts 32us, so each sync line holds two of them. These pulses are used to generate the sync signals as follows:

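| Line | First half | Second half |
|---|---|---|
| 1 | Long pulse | Long pulse |
| 2 | Long pulse | Long pulse |
| 3 | Long pulse | Short pulse |
| 4 | Short pulse | Short pulse |
| 5 | Short pulse | Short pulse |
| 6-309 | Visible lines | |
| 310 | Short pulse | Short pulse |
| 311 | Short pulse | Short pulse |
| 312 | Short pulse | Short pulse |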
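
The frame structure above can also be written down as data, which makes it easy to check that the line counts and pulse lengths add up. This is only a minimal Python sketch, not code from the project; the names `LONG`, `SHORT`, `VISIBLE`, `frame_layout` and `sync_line_duration_us` are made up for illustration.

```python
# Pulse shapes from the text, as (microseconds low, microseconds high).
LONG = (30, 2)
SHORT = (2, 30)
VISIBLE = "visible"  # placeholder for a normal picture line (hypothetical marker)


def frame_layout():
    """Return a list with one entry per scanline of a 312-line progressive frame."""
    top_sync = [
        (LONG, LONG),    # line 1
        (LONG, LONG),    # line 2
        (LONG, SHORT),   # line 3
        (SHORT, SHORT),  # line 4
        (SHORT, SHORT),  # line 5
    ]
    visible = [VISIBLE] * 304           # lines 6-309
    bottom_sync = [(SHORT, SHORT)] * 3  # lines 310-312
    return top_sync + visible + bottom_sync


def sync_line_duration_us(line):
    """Total duration of a sync line made of two half-line pulses."""
    return sum(low + high for (low, high) in line)


frame = frame_layout()
print(len(frame))                       # number of lines in the frame
print(sync_line_duration_us(frame[0]))  # duration of line 1 in microseconds
```

Every sync line built this way is two 32us pulses, so it fills one 64us scanline exactly.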

Every visible line starts with a horizontal sync pulse for the monitor. The HSYNC is 0V for 4.7us. The HSYNC is followed by a "back porch", which is 0.3V for 1.65us. In the case of a color signal, a special color burst signal is generated during the back porch, but since we are at the moment dealing only with black and white images, we can skip this detail. After the back porch the remainder of the scanline contains luminosity data in the range 0.3V (black) to 1V (white).

Since I'm using an ATmega1284P microcontroller, which can only output digital values that are either 0V (low) or 5V (high), how can I generate the needed voltages? For a black and white image, the needed voltages are 0V (HSYNC), 0.3V (black) and 1V (white). The crucial point to understand is that there is essentially a 75 ohm resistor inside the monitor which terminates the composite video signal to ground. This is called the input impedance and the value of 75 ohms is determined by the PAL standard. With this information it's simple to come up with the following circuit:

SYNC, VIDEO and GND coming from left, monitor on the right.

The 1K resistor and the 75 ohm "resistor" inside the monitor form a voltage divider. When the SYNC signal is high, the monitor receives the following voltage: 75 / (1000 + 75) * 5V = 0.35V. Similarly, the 470 ohm and 75 ohm resistors form another voltage divider that sets the voltage level at the monitor input to 75 / (470 + 75) * 5V = 0.7V when the VIDEO signal is high. With different combinations of SYNC and VIDEO values we can generate the voltages 0V, 0.35V and 1.05V. Close enough to what we need!
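
The divider arithmetic is easy to check numerically. The Python sketch below first reproduces the simple two-resistor estimates, then tries a slightly fuller model. That second model rests on an assumption not stated in the text: a pin that is low is actively driven to 0V, so its resistor sits in parallel with the 75 ohm termination. The function names and the ideal-driver model are illustrative only.

```python
R_SYNC = 1000.0   # ohms, resistor on the SYNC pin
R_VIDEO = 470.0   # ohms, resistor on the VIDEO pin
R_LOAD = 75.0     # ohms, termination inside the monitor
V_HIGH = 5.0      # volts, logic high of the MCU pin


def simple_divider(r_series, v_in=V_HIGH, r_load=R_LOAD):
    """Two-resistor divider, ignoring the other pin entirely."""
    return v_in * r_load / (r_series + r_load)


def output_voltage(sync_high, video_high):
    """Node voltage with both pins modelled as ideal 0V / 5V sources (assumption)."""
    v_sync = V_HIGH if sync_high else 0.0
    v_video = V_HIGH if video_high else 0.0
    # Millman's theorem: sum of source currents divided by sum of conductances,
    # with the 75 ohm load connected to ground.
    numerator = v_sync / R_SYNC + v_video / R_VIDEO
    denominator = 1 / R_SYNC + 1 / R_VIDEO + 1 / R_LOAD
    return numerator / denominator


print(round(simple_divider(R_SYNC), 2))   # the SYNC-only estimate from the text
print(round(simple_divider(R_VIDEO), 2))  # the VIDEO-only estimate from the text
for sync, video in [(False, False), (True, False), (True, True)]:
    print(sync, video, round(output_voltage(sync, video), 2))
```

Under this model the three levels come out at roughly 0V, 0.30V and 0.95V, which is still close to the 0V / 0.3V / 1V targets.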

The lost art of cycle counting

So, to generate a PAL frame we need to change the values of the two output pins SYNC and VIDEO very fast. These signals will get converted to proper voltage values by the two resistors. But how fast exactly do we need to change the pins, or "bitbang" them? Well, quite fast for a microcontroller running at 16 MHz... A single scanline is 64us long and an MCU running at 16 MHz has 16 clock cycles per microsecond. Therefore during a PAL scanline we have 64*16 = 1024 cycles. In 1024 cycles we have to generate the HSYNC pulse, the back porch pulse and the visible pixels. That means there's only time for a couple of clock cycles per pixel!

In the console project, I used a timer interrupt to trigger a routine every 64 microseconds. But interrupts have a rather large overhead on the time scale we are working with here: registers have to be saved and restored, and jumping to and back from the interrupt routine takes time. This time I decided to do this more efficiently. I have written the video signal generation entirely in assembly and explicitly cycle counted the code so that each scanline takes exactly 1024 cycles to execute. After a scanline has been processed I can immediately begin generating the next scanline. A very nice thing with this approach is that I can keep important values such as line counters and memory pointers in registers all the time.

Every scanline begins with the HSYNC signal, which is 4.7us long. At 16 MHz that is 75.2 cycles, so we round to 75 cycles. The back porch is 1.65us, which is 26.4 cycles and rounds to 26. In assembly we can cycle count and output the HSYNC and back porch in 75+26 cycles. That leaves exactly 1024-75-26 = 923 cycles for the pixels. Let's round this down to 900 cycles because we need some cycles for housekeeping, like incrementing the current line counter and jumping to the routine that processes the next scanline. At a horizontal resolution of 320 pixels, for example, that would be only 900/320 = 2.8 cycles per pixel. Pulling a pixel from the MCU's internal SRAM takes 2 cycles and outputting it takes 1 cycle, so we would need at least three cycles per pixel even for simple direct bitmapped graphics. At first it seems there is no way to get what we want with this microcontroller.
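
The cycle budget can be recomputed in a few lines of Python. This is just the arithmetic from the paragraph above, not project code; the constant and function names are made up, and the 23-cycle housekeeping reserve is simply the difference between 923 and the rounded 900.

```python
F_CPU_MHZ = 16             # clock cycles per microsecond at 16 MHz
LINE_US = 64.0             # one PAL scanline
HSYNC_US = 4.7
BACK_PORCH_US = 1.65
HOUSEKEEPING_RESERVE = 23  # 923 rounded down to 900, as in the text


def cycles(us):
    """Convert microseconds to whole clock cycles, rounding to nearest."""
    return round(us * F_CPU_MHZ)


line_cycles = cycles(LINE_US)          # cycles per scanline
hsync_cycles = cycles(HSYNC_US)        # 75.2 rounds to 75
porch_cycles = cycles(BACK_PORCH_US)   # 26.4 rounds to 26
pixel_cycles = line_cycles - hsync_cycles - porch_cycles
usable_cycles = pixel_cycles - HOUSEKEEPING_RESERVE


def cycles_per_pixel(width):
    """Cycles available per pixel for a given horizontal resolution."""
    return usable_cycles / width


print(line_cycles, hsync_cycles, porch_cycles, pixel_cycles)
print(cycles_per_pixel(320))
```

Anything below 3 cycles per pixel rules out the fetch-then-output loop described above, which is why 320 pixels does not fit with plain bitbanging.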

To make matters worse, a bitmapped image takes a lot of memory to store and is very heavy for the 6502 to process. That's why 6502 computers usually have a character-based display mode, where the screen RAM contains indices or pointers to character data stored elsewhere in memory. For example, the screen of a C64 is divided into 40x25 characters and each character is 8x8 pixels. So for every 8th pixel the video generator has to fetch the character from screen RAM and then the pixels from character memory. All this pushes the cycle cost well above 3 cycles per pixel.
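
The double lookup in a character-based mode can be sketched like this in Python. The memory layout is an assumption chosen for illustration (8 consecutive bytes per character, one byte per character row, most significant bit leftmost); the project's own layout may differ, and `scanline_bytes` is a hypothetical helper name.

```python
CHAR_H = 8  # pixel rows per character


def scanline_bytes(screen_ram, charset, columns, y):
    """Return the pixel bytes for display line y of a character-mapped screen.

    screen_ram: flat sequence of character codes, `columns` per text row.
    charset:    flat sequence of bytes, 8 consecutive bytes per character
                (assumed layout, see the lead-in).
    """
    text_row = y // CHAR_H   # which row of characters this line falls in
    glyph_row = y % CHAR_H   # which of the 8 rows inside each character
    row_start = text_row * columns
    out = []
    for col in range(columns):
        code = screen_ram[row_start + col]               # first fetch: screen RAM
        out.append(charset[code * CHAR_H + glyph_row])   # second fetch: character data
    return out


# Tiny example: character 0 is blank, character 1 is a rough "A".
charset = [0x00] * 8 + [0x18, 0x3C, 0x66, 0x7E, 0x66, 0x66, 0x66, 0x00]
screen_ram = [1, 0, 1, 0,
              0, 1, 0, 1]   # 4 columns x 2 text rows
print([hex(b) for b in scanline_bytes(screen_ram, charset, 4, 1)])
```

Each output byte costs two memory reads instead of one, which is the extra per-character work the video generator has to fit into its cycle budget.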

Attempt that almost worked

Luckily there is a faster way to get bits out of the ATmega1284P. The ATmega1284P has a built-in Serial Peripheral Interface (SPI), which is essentially a shift register whose clock frequency can be configured. The maximum rate for SPI is the system clock divided by two, that is 8 MHz in our case. After the SPI has been initialized, a byte can be output by writing it to the SPI data register. The SPI hardware then shifts out the bits at 8 MHz, i.e. at 2 cycles per pixel. What's great is that the SPI runs independently, so we can execute other instructions while the SPI is doing the transfer. OK, I wired this up and wrote a scanline routine that pulls a character from memory, fetches a byte encoding the 8 pixels of a character line and outputs the byte using SPI.

Initial results were very promising. I could get 320x256 resolution and even higher seemed possible. However, then I hit a major snag! See image below.

Argh, those black vertical gaps between characters!

There is a one-pixel gap between every character. Even when I waited for exactly the right number of cycles, I got either this gap or corruption on the screen. I was pretty sure I was doing everything right and it felt like a hardware problem. Googling revealed a nightmare: this is a known hardware limitation. The SPI cannot send a continuous stream of bytes, apparently because there is no buffering. There is just a single register that gets shifted out, and the hardware needs one extra cycle to load the shift register between transmits.

This was such a major setback. It seemed I would have to live with the gaps. This didn't seem like a good idea because I want to get nice character based graphics out of this thing eventually and having gaps there would certainly ruin it in a major way.

USART MSPI to the rescue!

I thought about using an external shift register as a workaround. A byte would be loaded one at a time using 8 parallel I/O pins (plus some control pins for the clock signal etc.), but I was already very tight on I/O pins so I couldn't afford this. I was really frustrated and even considered abandoning the idea of bitbanging the video signal using an MCU. But then, after reading the datasheets carefully, I learned there was another way: the built-in USART can also send data SPI-style, in what is called the "USART in MSPI mode". The USART has a transmit buffer, so maybe this hardware could be the magic I needed to fix the gaps? A quick Googling seemed to indicate that this could be possible. So last night I made the necessary changes and nervously fired up my microcomputer... and huzzah, the gaps were gone!

With this victory under my belt, I optimized the code further. I could now output an 8-pixel-wide character in just 16 cycles, including the screen RAM to character data indirection. With this I could extend lines to 50 characters, yielding a resolution of 400x256. The character generation now needs 50*16 = 800 cycles, so there is still some time left. I could still extend the screen width a bit, but I'm going to settle for this nice round number for now.
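
As a quick sanity check of these numbers, here is the same arithmetic in Python. The 900-cycle pixel budget is taken from the earlier cycle counting (923 cycles minus a reserve for housekeeping); the constant and function names are made up for illustration.

```python
CHAR_WIDTH = 8           # pixels per character
CYCLES_PER_CHAR = 16     # from the text: one 8-pixel character every 16 cycles
USABLE_CYCLES = 900      # pixel budget per scanline after HSYNC, back porch and housekeeping


def line_cost(chars):
    """Cycles spent generating `chars` characters on one scanline."""
    return chars * CYCLES_PER_CHAR


def max_chars(budget):
    """Largest whole number of characters that fits in `budget` cycles."""
    return budget // CYCLES_PER_CHAR


chars = 50
print(chars * CHAR_WIDTH)             # horizontal resolution in pixels
print(line_cost(chars))               # cycles used by the 50-character line
print(USABLE_CYCLES - line_cost(chars))  # cycles left over
print(max_chars(USABLE_CYCLES))       # widest line that would still fit this budget
```

With this budget there is room for a handful of extra characters per line, which matches the remark that the width could still be extended a bit.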

You can find the source code of the project on GitHub. The screen contents are so far stored in the ATmega's internal SRAM and are completely static. Next I'm going to interface it with the 6502 and then the real fun can begin!

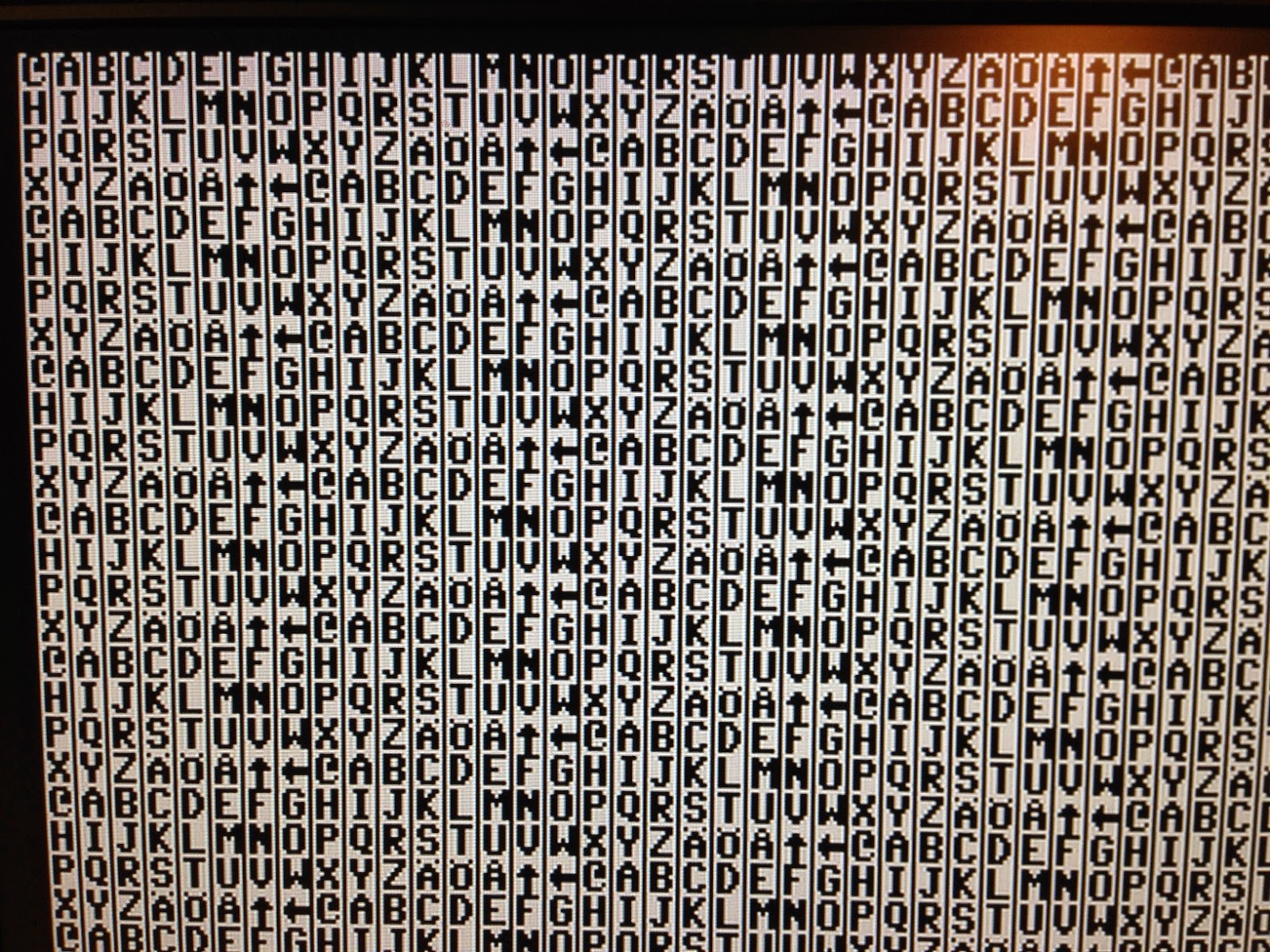

Finally, here's a gapless screenshot using a very familiar character set.
